Using InterSystems Cloud Manager

As of release 2023.3 of InterSystems IRIS, InterSystems Cloud Manager (ICM) is deprecated; it will be removed from future versions.

This page explains how to use ICM to deploy an InterSystems IRIS configuration in a public cloud, using a sample deployment on AWS as the example. Its sections cover the following steps:

- Review deployments based on two sample use cases that are used as examples on this page.
- Use the docker run command with the ICM image provided by InterSystems to start the ICM container and open a command line.
- Obtain the cloud provider and TLS credentials needed for secure communications by ICM.
- Decide how many of each node type you want to provision and make other needed choices, then create the defaults.json and definitions.json configuration files needed for the deployment.
- Use the icm provision command to provision the infrastructure and explore ICM’s infrastructure management commands. You can reprovision at any time to modify existing infrastructure, including scaling out or in.
- Run the icm run command to deploy containers, and explore ICM’s container and service management commands. You can redeploy when the infrastructure has been reprovisioned or when you want to add, remove, or upgrade containers.
- Run the icm unprovision command to destroy the deployment.

For comprehensive lists of the ICM commands and command-line options covered in detail in the following sections, see ICM Commands and Options.
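As a rough orientation, the three ICM commands named in the steps above can be sketched as a dry-run script. The `run` helper and the `DRY_RUN` variable are assumptions of this sketch, not part of ICM; by default the commands are only printed, and no command-line options are shown because they depend on your deployment.

```shell
#!/bin/bash
# Dry-run sketch of the ICM workflow; set DRY_RUN=0 inside the ICM container to execute.
DRY_RUN="${DRY_RUN:-1}"

run() {
  # Print the command in dry-run mode, otherwise execute it.
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

icm_workflow() {
  run icm provision      # provision the infrastructure
  run icm run            # deploy containers
  run icm unprovision    # destroy the deployment when it is no longer needed
}

icm_workflow
```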

ICM Use Cases

This page is focused on two typical ICM use cases, deploying the following two InterSystems IRIS configurations:

-

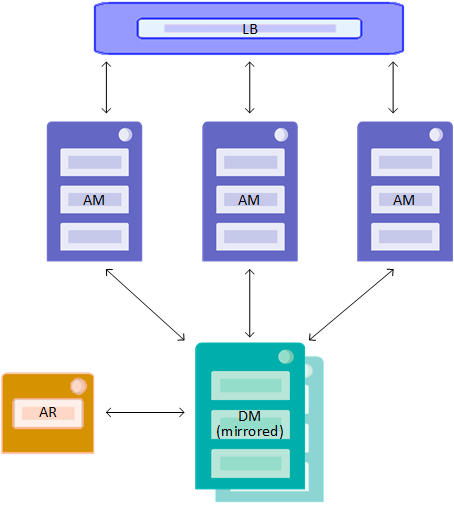

Distributed cache cluster — Mirrored data server, three application servers, arbiter node, and load balancer. This deployment is illustrated in the section Distributed Cache Cluster Definitions File.

-

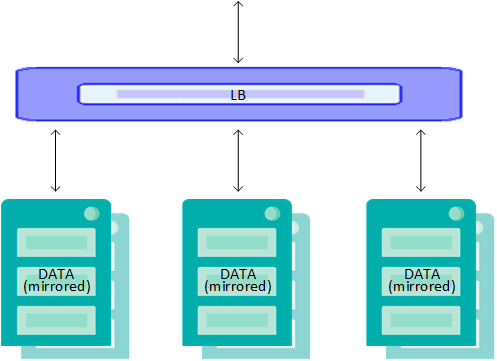

Basic sharded cluster — Three mirrored data nodes, arbiter node, and load balancer. This deployment is illustrated in Sharded Cluster Definitions File.

Most of the steps in the deployment process are the same for both configurations. The primary difference lies in the definitions files; see Define the Deployment for detailed contents. Output shown for the provisioning phase (The icm provision Command) is for the distributed cache cluster; output shown for the deployment phase (Deploy and Manage Services) is for the sharded cluster.

Launch ICM

ICM is provided as a Docker image. Everything required by ICM to carry out its provisioning, deployment, and management tasks — for example Terraform, the Docker client, and templates for the configuration files — is included in the ICM container. Therefore the only requirement for the Linux, macOS or Microsoft Windows system on which you launch ICM is that Docker is installed.

ICM is supported on Docker Enterprise Edition and Community Edition version 18.09 and later; Enterprise Edition only is supported for production environments.

Multiple ICM containers can be used to manage a single deployment, for example to make it possible for different people to execute different phases of the deployment process; for detailed information, see Sharing ICM Deployments.

Downloading the ICM Image

To use ICM, you need to download the ICM image to the system you are working on; this requires you to identify the registry from which you will download it and the credentials you need for access. Similarly, for ICM to deploy InterSystems IRIS and other InterSystems components, it requires this information for the images involved. The registry from which ICM downloads images must be accessible to the cloud provider you use (that is, not behind a firewall), and for security must require ICM to authenticate using the credentials you provide to it.

The InterSystems Container Registry (ICR) includes repositories for all images available from InterSystems, including ICM and InterSystems IRIS images; Using the InterSystems Container Registry provides detailed information about the available images and how to use the ICR. In addition, your organization may already store InterSystems images in its own private image registry; if so, obtain the location and the credentials needed to authenticate from the responsible administrator.

Once you are logged in to the ICR or your organization’s registry, you can use the docker pull command to download the image; the following example shows a pull from the ICR.

$ docker login containers.intersystems.com

Username: pmartinez

Password: **********

$ docker pull containers.intersystems.com/intersystems/icm:latest-em

5c939e3a4d10: Pull complete

c63719cdbe7a: Pull complete

19a861ea6baf: Pull complete

651c9d2d6c4f: Pull complete

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

containers.intersystems.com/intersystems/iris 2022.2.0.221.0 15627fb5cb76 1 minute ago 1.39GB

containers.intersystems.com/intersystems/sam 1.0.0.115 15627fb5cb76 3 days ago 1.33GB

acme/centos 7.3.1611 262f7381844c 2 weeks ago 192MB

acme/hello-world latest 05a3bd381fc2 2 months ago 1.84kB

For simplicity, the instructions in this document assume you are working with the InterSystems images from an InterSystems repository with the latest-em tag, for example intersystems/icm:latest-em.

Whether the images used by ICM are in the same registry or a different one, you will need to provide the image identifier, including registry, in the DockerImage field and the credentials needed to authenticate in the DockerUsername and DockerPassword fields for each image, as described in Docker Repositories.

Running the ICM Container

To launch ICM from the command line on a system on which Docker is installed, use the docker run command, which actually combines three separate Docker commands to do the following:

- Download the ICM image from the repository if it is not already present locally; if it is present, the local copy is used (this step can also be done separately with the docker pull command).
- Create a container from the ICM image (docker create command).
- Start the ICM container (docker start command).

For example:

docker run --name icm --init -d -it --cap-add SYS_TIME intersystems/icm:latest-em

The -i option makes the command interactive and the -t option opens a pseudo-TTY, giving you command line access to the container. From this point on, you can interact with ICM by invoking ICM commands on the pseudo-TTY command line. The --cap-add SYS_TIME option allows the container to interact with the clock on the host system, avoiding clock skew that may cause the cloud service provider to reject API commands.
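To make the three-step breakdown concrete, the following sketch prints the separate docker pull, docker create, and docker start commands that correspond to the docker run example. The `run` helper and `DRY_RUN` switch are assumptions of this sketch; nothing is executed unless `DRY_RUN=0` is set on a host where Docker is installed.

```shell
#!/bin/bash
# Sketch: the three steps that docker run combines, printed rather than executed by default.
IMAGE="intersystems/icm:latest-em"   # image name taken from the docker run example
DRY_RUN="${DRY_RUN:-1}"

run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

launch_icm_in_steps() {
  run docker pull "$IMAGE"                                             # 1. download the image
  run docker create --name icm --init -it --cap-add SYS_TIME "$IMAGE"  # 2. create the container
  run docker start icm                                                 # 3. start the container
}

launch_icm_in_steps
```

A container started this way runs in the background; one way to reach its command line is docker attach icm.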

The ICM container includes a /Samples directory that provides you with samples of the elements required by ICM for provisioning, configuration, and deployment. The /Samples directory makes it easy for you to provision and deploy using ICM out of the box. Eventually, you can use locations outside the container to store these elements and InterSystems IRIS licenses, and either mount those locations as external volumes when you launch ICM (see Manage data in Docker in the Docker documentation) or copy files into the ICM container using the docker cp command.

Of course, the ICM image can also be run by custom tools and scripts, and this can help you accomplish goals such as making these external locations available within the container, and saving your configuration files and your state directory (which is required to remove the infrastructure and services you provision) to persistent storage outside the container as well. A script, for example, could do the latter by capturing the current working directory in a variable and using it to mount that directory as a storage volume when running the ICM container, as follows:

#!/bin/bash

clear

# use the basename of the current directory as the mount point inside the container

MOUNT=$(basename "$(pwd)")

# run ICM in the foreground so the script resumes only after the ICM session exits

docker run --name icm -it --volume "$PWD:/$MOUNT" --cap-add SYS_TIME intersystems/icm:latest-em

printf "\nExited icm container\n"

printf "\nRemoving icm container...\nContainer removed: "

docker rm icm

You can mount multiple external storage volumes when running the ICM container (or any other). When deploying InterSystems IRIS containers, ICM automatically formats, partitions, and mounts several storage volumes; for more information, see Storage Volumes Mounted by ICM.

On a Windows host, you must enable the local drive on which the directory you want to mount as a volume is located using the Shared Drives option on the Docker Settings ... menu; see Using InterSystems IRIS Containers with Docker for Windows on InterSystems Developer Community for additional requirements and general information about Docker for Windows.

When an error occurs during an ICM operation, ICM displays a message directing you to the log file in which information about the error can be found. Before beginning an ICM deployment, familiarize yourself with the log files and their locations as described in Log Files and Other ICM Files.

Upgrading an ICM Container

Distributed management mode, which allows different users on different systems to use ICM to manage the same deployment, provides a means of upgrading an ICM container while preserving the needed state files of the deployment it is managing (see The State Directory and State Files). Because this is the recommended way to upgrade an ICM container that is managing a deployment, you may want to configure distributed management mode each time you use ICM, whether you intend to use distributed management or not, so that this option is available. For information about upgrading ICM in distributed management mode, see Upgrading ICM Using Distributed Management Mode.

Obtain Security-Related Files

ICM communicates securely with the cloud provider on which it provisions the infrastructure, with the operating system of each provisioned node, and with Docker and several InterSystems IRIS services following container deployment. Before defining your deployment, you must obtain the credentials and other files needed to enable secure communication.

Cloud Provider Credentials

To use ICM with one of the public cloud platforms, you must create an account and download administrative credentials. To do this, follow the instructions provided by the cloud provider; you can also find information about how to download your credentials once your account exists in the Provider-Specific Parameters. In the ICM configuration files, you identify the location of these credentials using the parameter(s) specific to the provider; for AWS, this is the Credentials parameter.

When using ICM with a vSphere private cloud, you can use an existing account with the needed privileges, or create a new one. You specify these using the Username and Password fields.

SSH and TLS Keys

ICM uses SSH to provide secure access to the operating system of provisioned nodes, and TLS to establish secure connections to Docker, InterSystems Web Gateway, and JDBC, and between nodes in InterSystems IRIS mirrors, distributed cache clusters, and sharded clusters. The locations of the files needed to enable this secure communication are specified using several ICM parameters, including:

- SSHPublicKey: Public key of SSH public/private key pair used to enable secure connections to provisioned host nodes; in SSH2 format for AWS and OpenSSH format for other providers.
- SSHPrivateKey: Private key of SSH public/private key pair.
- TLSKeyDir: Directory containing TLS keys used to establish secure connections to Docker, InterSystems Web Gateway, JDBC, and mirrored InterSystems IRIS databases.

You can create these files, either for use with ICM, or to review them in order to understand which are needed, using two scripts provided with ICM, located in the directory /ICM/bin in the ICM container. The keygenSSH.sh script creates the needed SSH files and places them in the directory /Samples/ssh in the ICM container. The keygenTLS.sh script creates the needed TLS files and places them in /Samples/tls. You can then specify these locations when defining your deployment, or obtain your own files based on the contents of these directories.

For more information about the security files required by ICM and generated by the keygen* scripts, see ICM Security and Security-Related Parameters.

The keys generated by these scripts, as well as your cloud provider credentials, must be fully secured, as they provide full access to any ICM deployments in which they are used.

The keys generated by the keygen* scripts are intended as a convenience for your use in your initial test deployments. (Some have strings specific to InterSystems Corporation.) In production, the needed keys should be generated or obtained in keeping with your company's security policies.
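For a test deployment, running the two scripts might look like the following sketch. The script names and the /ICM/bin, /Samples/ssh, and /Samples/tls locations come from the text above; the `run_keygen` wrapper and its optional directory argument are hypothetical conveniences added here so the function can be tried outside the ICM container.

```shell
#!/bin/bash
# Sketch: run the ICM key-generation scripts if they are present.
run_keygen() {
  # $1 = directory holding the scripts (defaults to the location named in the text)
  local bin_dir="${1:-/ICM/bin}"
  local script
  for script in keygenSSH.sh keygenTLS.sh; do
    if [ -x "$bin_dir/$script" ]; then
      "$bin_dir/$script" || return 1
    else
      echo "not found: $bin_dir/$script (are you inside the ICM container?)" >&2
      return 1
    fi
  done
}

# Only attempt generation where the ICM script directory exists.
if [ -d /ICM/bin ]; then
  run_keygen && ls /Samples/ssh /Samples/tls
fi
```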

Define the Deployment

To provide the needed parameters to ICM, you must select values for a number of fields, based on your goal and circumstances, and then incorporate these into the defaults and definitions files to be used for your deployment. You can begin with the template defaults.json and definitions.json files provided in the /Samples directory tree in the ICM container, for example /Samples/AWS.

As noted in Configuration, State and Log Files, defaults.json is often used to provide shared settings for multiple deployments in a particular category, for example those that are provisioned on the same platform, while separate definitions.json files define the node types that must be provisioned and configured for each deployment. For example, the separate definitions files illustrated here define the two target deployments described at the start of this page: the distributed cache cluster includes two DM nodes as a mirrored data server, three load-balanced AM nodes as application servers, and an arbiter (AR) node, while the sharded cluster includes six DATA nodes configured as three load-balanced mirrored data nodes, plus an arbiter node. At the same time, the deployments can share a defaults.json file because they have a number of characteristics in common; for example, they are both on AWS, use the same credentials, provision in the same region and availability zone, and deploy the same InterSystems IRIS image.

While some fields (such as Provider) must appear in defaults.json and some (such as Role) in definitions.json, others can be used in either depending on your needs. In this case, for example, the InstanceType field appears in the shared defaults file and both definitions files, because the DM, AM, DATA, and AR nodes all require different compute resources; for this reason a single defaults.json setting, while establishing a default instance type, is not sufficient.
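The override relationship can be pictured with a small sketch: a value in a node definition wins, and the defaults file value applies only when the definition omits the field. The function name is hypothetical, and the instance types are the ones used in the sample files on this page (the AM definition sets no InstanceType, so it falls back to the default).

```shell
#!/bin/bash
# Sketch: a definitions value, when present, overrides the defaults value.
DEFAULT_INSTANCE_TYPE="m5.large"   # InstanceType from the sample defaults file

effective_instance_type() {
  # $1 = InstanceType from the node definition (empty when the definition omits it)
  echo "${1:-$DEFAULT_INSTANCE_TYPE}"
}

echo "DM: $(effective_instance_type m4.xlarge)"
echo "AM: $(effective_instance_type "")"
echo "AR: $(effective_instance_type t2.small)"
```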

The following sections explain how you can customize the configuration of the InterSystems IRIS instances you deploy and review the contents of both the shared defaults file and the separate definitions files. Each field/value pair is shown as it would appear in the configuration file.

Bear in mind that ICM allows you to modify the definitions file of an existing deployment and then reprovision and/or redeploy to add or remove nodes or to alter existing nodes. For more information, see Reprovisioning the Infrastructure and Redeploying Services.

Both field names and values are case-sensitive; for example, to select AWS as the cloud provider you must include "Provider":"AWS" in the defaults file, not "provider":"AWS", "Provider":"aws", and so on.
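One way to catch a capitalization slip before provisioning is a case-sensitive search of the configuration file. The sketch below is an informal pre-check using grep, not an ICM feature; `has_pair` is a hypothetical helper, and it assumes simple field names and values with no regular-expression metacharacters.

```shell
#!/bin/bash
# Sketch: case-sensitive check that a field/value pair appears in a configuration file.
has_pair() {
  local file="$1" field="$2" value="$3"
  # grep is case-sensitive by default; the pattern allows optional whitespace around the colon.
  grep -Eq "\"$field\"[[:space:]]*:[[:space:]]*\"$value\"" "$file"
}

demo=$(mktemp)
printf '{\n  "provider": "aws"\n}\n' > "$demo"
if has_pair "$demo" Provider AWS; then
  echo "Provider is AWS"
else
  echo "no exact \"Provider\": \"AWS\" pair found (check capitalization)"
fi
rm -f "$demo"
```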

The fields included here represent a subset of the potentially applicable fields; see ICM Configuration Parameters for comprehensive lists of all required and optional fields, both general and provider-specific.

Shared Defaults File

The field/value pairs shown in the table in this section represent the contents of a defaults.json file that can be used for both the distributed cache cluster deployment and the sharded cluster deployment. As described at the start of this section, this file can be created by making a few modifications to the /Samples/AWS/defaults.json file, which is shown here:

{

"Provider": "AWS",

"Label": "Sample",

"Tag": "TEST",

"DataVolumeSize": "10",

"SSHUser": "ubuntu",

"SSHPublicKey": "/Samples/ssh/insecure-ssh2.pub",

"SSHPrivateKey": "/Samples/ssh/insecure",

"DockerRegistry": "https://containers.intersystems.com",

"DockerImage": "containers.intersystems.com/intersystems/iris:some-tag",

"DockerUsername": "xxxxxxxxxxxx",

"DockerPassword": "xxxxxxxxxxxx",

"TLSKeyDir": "/Samples/tls/",

"LicenseDir": "/Samples/license/",

"Region": "us-east-1",

"Zone": "us-east-1a",

"AMI": "ami-07267eded9a267f32",

"DockerVersion": "5:20.10.17~3-0~ubuntu-jammy",

"InstanceType": "m5.large",

"Credentials": "/Samples/AWS/sample.credentials",

"ISCPassword": "",

"Mirror": "false",

"UserCPF": "/Samples/cpf/iris.cpf"

}

The order of the fields in the table matches the order of this sample defaults file.

In the defaults file for a different provider, some of the fields have different provider-specific values, while others are replaced by different provider-specific fields. For example, in the Tencent defaults file, the value for InstanceType is S2.MEDIUM4, a Tencent-specific instance type that would be invalid on AWS, while the AWS AMI field is replaced by the equivalent Tencent field, ImageId. You can review these differences by examining the varying defaults.json files in the /Samples directory tree and referring to the General Parameters and Provider-Specific Parameters tables.

The pathnames provided in the fields specifying security files in this sample defaults file assume you have placed your AWS credentials in the /Samples/AWS directory and used the keygen*.sh scripts to generate the keys as described in Obtain Security-Related Files. If you have generated or obtained your own keys, these may be replaced by internal paths to external storage volumes mounted when the ICM container is run, as described in Launch ICM. For additional information about these files, see ICM Security and Security-Related Parameters.
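Making "a few modifications" to a copy of the sample file can be scripted. The following sketch changes one string value in place with sed; `set_field` is a hypothetical helper, the three-field file stands in for a copy of /Samples/AWS/defaults.json, and the approach assumes plain values that contain no "/" or "&" characters. Editing the file by hand works equally well.

```shell
#!/bin/bash
# Sketch: change the value of one field in a copy of a defaults file.
set_field() {
  # $1 = file, $2 = field name, $3 = new value (plain string without "/" or "&")
  sed -i.bak -E "s/(\"$2\"[[:space:]]*:[[:space:]]*\")[^\"]*(\")/\1$3\2/" "$1"
}

work=$(mktemp -d)
# Stand-in for a copy of the sample defaults file.
cat > "$work/defaults.json" <<'EOF'
{
    "Provider": "AWS",
    "Label": "Sample",
    "Tag": "TEST"
}
EOF

set_field "$work/defaults.json" Label ANDY
cat "$work/defaults.json"
```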

| Shared characteristic | /Samples/AWS/defaults.json | Customization example | Customization explanation |
|---|---|---|---|
| Platform to provision infrastructure on, in this case Amazon Web Services; see Provisioning Platforms. | "Provider": "AWS", | n/a | If value is changed to GCP, Azure, Tencent, vSphere, or PreExisting, different fields and values from those shown here are required. |
| Naming pattern for provisioned nodes is Label-Role-Tag-NNNN, where Role is the value of the Role field in the definitions file, for example ANDY-DATA-TEST-0001. Modify these so that node names indicate ownership and purpose. | "Label": "Sample", "Tag": "TEST", | "Label": "ANDY", "Tag": "TEST", | Update to identify owner. |
| Size (in GB) of the persistent data volume to create for InterSystems IRIS containers; see Storage Volumes Mounted by ICM. Can be overridden for specific node types in the definitions file. | "DataVolumeSize": "10", | "DataVolumeSize": "250", | If all deployments using the defaults file consist of sharded cluster (DATA) nodes only, enlarging the default size of the data volume is recommended. |
| Nonroot account with sudo access, used by ICM for SSH access to provisioned nodes. On AWS, the required value depends on the AMI, but is typically ubuntu for Ubuntu AMIs; see Security-Related Parameters. | "SSHUser": "ubuntu", | n/a | If value is changed to GCP, Azure, Tencent, vSphere, or PreExisting, different fields and values from those shown here are required. |
| Locations of needed security key files; see Obtain Security-Related Files and Security-Related Parameters. Because provider is AWS, the SSH2-format public key in /Samples/ssh/ is specified. | "SSHPublicKey": "/Samples/ssh/secure-ssh2.pub", "SSHPrivateKey": "/Samples/ssh/secure-ssh2", "TLSKeyDir": "/Samples/tls/", | "SSHPublicKey": "/mydir/keys/mykey.pub", "SSHPrivateKey": "/mydir/keys/mykey.ppk", "TLSKeyDir": "/mydir/keys/tls/", | If you stage your keys on a mounted external volume, update the paths to reflect this. |
| The Docker version to be installed on provisioned nodes; typically you can keep the default value. | "DockerVersion": "5:20.10.5~3-0~ubuntu-bionic", | "DockerVersion": "18.06.1~ce~3-0~ubuntu", | The version in each /Samples/.../defaults.json is generally correct for the platform. However, if your organization uses a different version of Docker, you may want that version installed on the cloud nodes instead. |
| The Docker image to deploy on provisioned nodes; see Docker Repositories, The icm run Command, and General Parameters. This field can also be included in a node definition in definitions.json, overriding the defaults file value, as illustrated in Distributed Cache Cluster Definitions File. | "DockerImage": "intersystems/iris:stable", | "DockerImage": "acme/iris:latest-em" | If you pushed the InterSystems IRIS image to your organization’s registry, update the image spec. Note: InterSystems IRIS images for standard platforms are named iris; those for ARM platforms are named iris-arm64. |
| Credentials to log in to the Docker registry in which the image specified by the previous field is stored; see Downloading the ICM Image. | "DockerUsername": "xxxxxxxxxxxx", "DockerPassword": "xxxxxxxxxxxx", | "DockerUsername": "AndyB", "DockerPassword": "password", | Update to use your Docker credentials for the specified registry. |
| Location of InterSystems IRIS license keys staged in the ICM container and individually specified by the LicenseKey fields in the definitions file; see InterSystems IRIS Licensing for ICM. | "LicenseDir": "/Samples/Licenses", | "LicenseDir": "/mydir/licenses", | If you stage your licenses on a mounted external volume, update the paths to reflect this. |
| Geographical region of provider’s compute resources in which infrastructure is to be provisioned; see General Parameters. | "Region": "us-west-1", | "Region": "us-east-2", | If you want to provision in another valid combination of region and availability zone, update the values to reflect this. |
| Availability zone within specified region in which to locate provisioned nodes; see General Parameters. | "Zone": "us-west-1c", | "Zone": "us-east-2a", | |
| AMI to use as platform and OS template for nodes to be provisioned; see Amazon Web Services (AWS) Parameters. | "AMI": "ami-0121ef35996ede438", | "AMI": "ami-e24b7d9d", | If you want to provision from another valid combination of AMI and instance type, update the values to reflect this. |
| Instance type to use as compute resources template for nodes to be provisioned; see Amazon Web Services (AWS) Parameters. | "InstanceType": "m4.large", | "InstanceType": "m5ad.large", | |
| Credentials for AWS account; see Amazon Web Services (AWS) Parameters. | "Credentials": "/Samples/AWS/sample.credentials", | "Credentials": "/mydir/aws-credentials", | If you stage your credentials on a mounted external volume, update the path to reflect this. |
| Password for deployed InterSystems IRIS instances. Recommended approach is to specify on the deployment command line (see Deploy and Manage Services) to avoid displaying password in a configuration file. | "ISCPassword": "", | (delete) | Remove in favor of specifying password by using -password option of icm run command. |
| Whether specific node types (including DM and DATA) defined in even numbers are deployed as mirrors (see Rules for Mirroring). | "Mirror": "true" | n/a | Both deployments are mirrored. |
| The configuration merge file to be used to override initial CPF settings for deployed InterSystems IRIS instances (see Deploying with Customized InterSystems IRIS Configurations). | "UserCPF": "/Samples/cpf/iris.cpf" | | Remove unless you are familiar with the configuration merge feature and CPF settings (see Automating Configuration of InterSystems IRIS with Configuration Merge). |

The major versions of the image from which you launched ICM and the InterSystems IRIS image you specify using the DockerImage field must match; for example, you cannot deploy a 2022.2 version of InterSystems IRIS using a 2022.1 version of ICM. For information about upgrading ICM before you upgrade your InterSystems containers, see Upgrading ICM Using Distributed Management Mode.
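When both images carry numeric tags, the version rule can be checked informally by comparing the leading components of the two tags. This sketch assumes tags shaped like 2022.2.0.221.0 and treats the first two dot-separated parts as the version to compare, following the 2022.2 versus 2022.1 example; the function names are hypothetical, and symbolic tags such as latest-em cannot be verified this way.

```shell
#!/bin/bash
# Sketch: compare the leading version components of two image tags.
release_of() {
  # Strip everything up to the last ":" and keep the first two dot-separated parts.
  echo "${1##*:}" | cut -d. -f1,2
}

versions_match() {
  [ "$(release_of "$1")" = "$(release_of "$2")" ]
}

if versions_match "intersystems/icm:2022.2.0.221.0" "intersystems/iris:2022.2.0.221.0"; then
  echo "ICM and InterSystems IRIS versions match"
else
  echo "version mismatch: upgrade ICM first"
fi
```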

Distributed Cache Cluster Definitions File

The definitions.json file for the distributed cache cluster must define the following nodes:

- Two data servers (role DM), configured as a mirror
- Three application servers (role AM)
- Load balancer for application servers
- Arbiter node for data server mirror

This configuration is illustrated in the following:

The table that follows lists the field/value pairs that are required for this configuration.

A standalone InterSystems IRIS instance, nonmirrored or mirrored—that is, a DM node or two DM nodes forming a mirror—can be deployed with a standard license, as can a distributed cache cluster (DM node or mirrored DM nodes plus AM nodes). In all sharded cluster configurations, node-level or namespace-level and nonmirrored or mirrored, all nodes on which an InterSystems IRIS container is deployed require a sharding-enabled InterSystems IRIS license. For example, if a nonmirrored or mirrored standalone instance with a standard license has DS nodes added to it, the license used for all of the nodes must be upgraded to a sharding license.

| Definition | Field: Value |
|---|---|
| Two data servers (DM) using a standard InterSystems IRIS license, configured as a mirror because "Mirror": "true" in shared defaults file. Instance type, OS volume size, data volume size override settings in defaults file to meet data server resource requirements. | "Role": "DM", "Count": "2", "LicenseKey": "ubuntu-standard-iris.key", "InstanceType": "m4.xlarge", "OSVolumeSize": "32", "DataVolumeSize": "150", |
| Three application servers (AM) using a standard InterSystems IRIS license. Numbering in node names starts at 0003 to follow DM nodes 0001 and 0002. Load balancer for application servers is automatically provisioned. | "Role": "AM", "Count": "3", "LicenseKey": "ubuntu-standard-iris.key", "StartCount": "3", "LoadBalancer": "true", |
| One arbiter (AR) for data server mirror, no license required, use of arbiter image overrides InterSystems IRIS image specified in defaults file. Node is numbered 0006. Instance type overrides defaults file because arbiter requires only limited resources. | "Role": "AR", "Count": "1", "DockerImage": "intersystems/arbiter:latest-em", "StartCount": "6", "InstanceType": "t2.small", |

A definitions.json file incorporating the settings in the preceding table would look like this:

[

{

"Role": "DM",

"Count": "2",

"LicenseKey": "ubuntu-standard-iris.key",

"InstanceType": "m4.xlarge",

"OSVolumeSize": "32",

"DataVolumeSize": "150"

},

{

"Role": "AM",

"Count": "3",

"LicenseKey": "ubuntu-standard-iris.key",

"StartCount": "3",

"LoadBalancer": "true"

},

{

"Role": "AR",

"Count": "1",

"DockerImage": "intersystems/arbiter:latest-em",

"StartCount": "6",

"InstanceType": "t2.small"

}

]
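The way Count and StartCount combine with the Label-Role-Tag-NNNN naming pattern can be worked through in a few lines. This sketch assumes numbering starts at 1 when StartCount is omitted, which is consistent with the DM nodes being numbered 0001 and 0002; `node_names` is a hypothetical helper, and ANDY and TEST are the example Label and Tag values used on this page.

```shell
#!/bin/bash
# Sketch: list node names following the Label-Role-Tag-NNNN pattern.
node_names() {
  # $1 = Label, $2 = Role, $3 = Tag, $4 = Count, $5 = StartCount (assumed to default to 1)
  local start="${5:-1}" i
  for ((i = start; i < start + $4; i++)); do
    printf '%s-%s-%s-%04d\n' "$1" "$2" "$3" "$i"
  done
}

node_names ANDY DM TEST 2      # ANDY-DM-TEST-0001 and ANDY-DM-TEST-0002
node_names ANDY AM TEST 3 3    # ANDY-AM-TEST-0003 through ANDY-AM-TEST-0005
node_names ANDY AR TEST 1 6    # ANDY-AR-TEST-0006
```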

Sharded Cluster Definitions File

The definitions.json file for the sharded cluster configuration must define three load-balanced mirrored DATA nodes. This is illustrated in the following:

The table that follows lists the field/value pairs that are required for this configuration.

| Definition | Field: Value |
|---|---|
| Six data nodes (DATA), configured as three load-balanced mirrored data nodes. | "Role": "DATA", "Count": "6", "LicenseKey": "ubuntu-sharding-iris.key", "InstanceType": "m4.4xlarge", "DataVolumeSize": "250", "LoadBalancer": "true" |
| One arbiter (AR) node. | "Role": "AR", "Count": "1", "DockerImage": "intersystems/arbiter:latest-em", "StartCount": "7", "InstanceType": "t2.small" |

A definitions.json file incorporating the settings in the preceding table would look like this:

[

{

"Role": "DATA",

"Count": "6",

"LicenseKey": "ubuntu-sharding-iris.key",

"InstanceType": "m4.4xlarge",

"DataVolumeSize": "250",

"LoadBalancer": "true"

},

{

"Role": "AR",

"Count": "1",

"DockerImage": "intersystems/arbiter:latest-em",

"StartCount": "7",

"InstanceType": "t2.small"

}

]
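The relationship between the DATA node Count and the number of mirrored data nodes is simple arithmetic: with mirroring enabled, the six DATA nodes defined here form three mirrored data nodes. In the sketch below, `mirror_pairs` is a hypothetical helper, and rejecting an odd count is a simplification of this sketch rather than a statement of ICM behavior (see Rules for Mirroring for the actual rules).

```shell
#!/bin/bash
# Sketch: an even Count of DATA nodes pairs up into Count/2 mirrored data nodes.
mirror_pairs() {
  local count="$1"
  if (( count % 2 != 0 )); then
    echo "Count $count is odd; this sketch expects an even number of nodes" >&2
    return 1
  fi
  echo $(( count / 2 ))
}

echo "6 DATA nodes -> $(mirror_pairs 6) mirrored data nodes"
```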

The DATA and COMPUTE node types were added to ICM in Release 2019.3 to support the node-level sharding architecture. Previous versions of this document described the namespace-level sharding architecture, which involves a different, larger set of node types. The namespace-level architecture remains in place as the transparent foundation of the node-level architecture and is fully compatible with it, and the node types used to deploy it are still available in ICM. For information about all available node types, see ICM Node Types.

For more detailed information about the specifics of deploying a sharded cluster, such as database cache size and data volume size requirements, see Deploying the Sharded Cluster.

The recommended best practice is to load-balance application connections across all of the data nodes in a cluster.

Customizing InterSystems IRIS Configurations

Every InterSystems IRIS instance, including those running in the containers deployed by ICM, is installed with a predetermined set of configuration settings, recorded in its configuration parameters file (CPF). You can use the UserCPF field in your defaults file (as illustrated in the /Samples/AWS/defaults.json file in Shared Defaults File) to specify a configuration merge file, allowing you to override one or more of these configuration settings for all of the InterSystems IRIS instances you deploy, or in your definitions file to override different settings for different node types, such as the DM and AM nodes in a distributed cache cluster or the DATA and COMPUTE nodes in a sharded cluster. For example, as described in Planning an InterSystems IRIS Sharded Cluster, you might want to adjust the size of the database caches on the data nodes in a sharded cluster, which you could do by overriding the value of the [config]/globals CPF setting for the DATA definitions only. For information about using a merge file to override initial CPF settings, see Deploying with Customized InterSystems IRIS Configurations.

A simple configuration merge file is provided in the /Samples/cpf directory in the ICM container, and the sample defaults files in all of the /Samples provider subdirectories include the UserCPF field, pointing to this file. Remove UserCPF from your defaults file unless you are sure you want to merge its contents into the default CPFs of deployed InterSystems IRIS instances.
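As an illustration of what a merge file overriding [config]/globals might contain, the following sketch writes a two-line file. It assumes the six-value form of the globals setting, in which the third value is the cache size in MB for 8 KB buffers; the 4096 value, the temporary file, and the `write_merge_file` helper are hypothetical, so confirm the format against the Configuration Parameter File Reference before using it.

```shell
#!/bin/bash
# Sketch: write a configuration merge file that overrides [config]/globals.
write_merge_file() {
  # $1 = output path, $2 = database cache size in MB for 8 KB buffers (hypothetical value)
  printf '[config]\nglobals=0,0,%s,0,0,0\n' "$2" > "$1"
}

merge_file=$(mktemp)
write_merge_file "$merge_file" 4096
cat "$merge_file"
```

To apply such a file to the data nodes only, the UserCPF field would go in the DATA definition in definitions.json rather than in the defaults file.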

Information about InterSystems IRIS configuration settings, their effects, and their installed defaults is provided in the Installation Guide, the System Administration Guide, and the Configuration Parameter File Reference.

Provision the Infrastructure

ICM provisions cloud infrastructure using the HashiCorp Terraform tool.

ICM can deploy containers on existing cloud, virtual or physical infrastructure; see Deploying on a Preexisting Cluster for more information.

The icm provision Command

The icm provision command allocates and configures host nodes, using the field values provided in the definitions.json and defaults.json files (as well as default values for unspecified parameters where applicable). The input files in the current working directory are used, and during provisioning, the state directory, instances.json file, and log files are created in the same directory (for details on these files see Configuration, State and Log Files).

Because the state directory and the generated instances.json file (which serves as input to subsequent reprovisioning, deployment, and management commands) are unique to a deployment, setting up and working in a separate directory for each deployment is generally the simplest effective approach. For example, in the scenario described here, in which two different deployments share a defaults file, the simplest approach would be to set up one deployment in a directory such as /Samples/AWS, as shown in Shared Defaults File, then copy that entire directory (for example to /Samples/AWS2) and replace the first definitions.json file with the second.
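The copy-and-replace approach might be scripted as in the following sketch. `clone_deployment` is a hypothetical helper, and the demonstration uses a temporary directory in place of /Samples/AWS and /Samples/AWS2. It assumes the source directory is copied before provisioning, so that no state directory or instances.json file is carried over.

```shell
#!/bin/bash
# Sketch: create a second deployment directory that reuses the same defaults file.
clone_deployment() {
  # $1 = existing deployment directory, $2 = new directory, $3 = definitions file for the new deployment
  local src="$1" dst="$2" new_defs="$3"
  if [ -e "$dst" ]; then
    echo "refusing to overwrite $dst" >&2
    return 1
  fi
  cp -R "$src" "$dst" && cp "$new_defs" "$dst/definitions.json"
}

# Demonstration with stand-in files in a temporary directory.
demo=$(mktemp -d)
mkdir "$demo/AWS"
echo '{}' > "$demo/AWS/defaults.json"
echo '[]' > "$demo/AWS/definitions.json"
echo '[{"Role": "DATA"}]' > "$demo/sharded-definitions.json"
clone_deployment "$demo/AWS" "$demo/AWS2" "$demo/sharded-definitions.json"
ls "$demo/AWS2"
```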

While the provisioning operation is ongoing, ICM provides status messages regarding the plan phase (the Terraform phase that validates the desired infrastructure and generates state files) and the apply phase (the Terraform phase that accesses the cloud provider, carries out allocation of the machines, and updates state files). Because ICM runs Terraform in multiple threads, the order in which machines are provisioned and in which additional actions applied to them is not deterministic. This is illustrated in the sample output that follows.

At completion, ICM also provides a summary of the host nodes and associated components that have been provisioned, and outputs a command line which can be used to unprovision (delete) the infrastructure at a later date.

The following example is excerpted from the output of provisioning of the distributed cache cluster described in Define the Deployment.

$ icm provision

Starting init of ANDY-TEST...

...completed init of ANDY-TEST

Starting plan of ANDY-DM-TEST...

...

Starting refresh of ANDY-TEST...

...

Starting apply of ANDY-DM-TEST...

...

Copying files to ANDY-DM-TEST-0002...

...

Configuring ANDY-AM-TEST-0003...

...

Mounting volumes on ANDY-AM-TEST-0004...

...

Installing Docker on ANDY-AM-TEST-0003...

...

Installing Weave Net on ANDY-DM-TEST-0001...

...

Collecting Weave info for ANDY-AR-TEST-0006...

...

...collected Weave info for ANDY-AM-TEST-0005

...installed Weave Net on ANDY-AM-TEST-0004

Machine IP Address DNS Name Region Zone

------- ---------- -------- ------ ----

ANDY-DM-TEST-0001+ 00.53.183.209 ec2-00-53-183-209.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-DM-TEST-0002- 00.53.183.185 ec2-00-53-183-185.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0003 00.56.59.42 ec2-00-56-59-42.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0005 00.67.1.11 ec2-00-67-1-11.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0003 00.193.117.217 ec2-00-193-117-217.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-LB-TEST-0002 (virtual AM) ANDY-AM-TEST-1546467861.amazonaws.com us-west-1 c

ANDY-AR-TEST-0006 00.53.201.194 ec2-00-53-201-194.us-west-1.compute.amazonaws.com us-west-1 c

To destroy: icm unprovision [-cleanUp] [-force]

Unprovisioning public cloud host nodes in a timely manner avoids unnecessary expense.

Interactions with cloud providers sometimes involve high latency leading to timeouts and internal errors on the provider side, and errors in the configuration files can also cause provisioning to fail. Because the icm provision command is fully reentrant, it can be issued multiple times until ICM completes all the required tasks for all the specified nodes without error. For more information, see the next section, Reprovisioning the Infrastructure.

Reprovisioning the Infrastructure

To make the provisioning process as flexible and resilient as possible, the icm provision command is fully reentrant — it can be issued multiple times for the same deployment. There are two primary reasons for reprovisioning infrastructure by executing the icm provision command more than once, as follows:

-

Overcoming provisioning errors

Interactions with cloud providers sometimes involve high latency leading to timeouts and internal errors on the provider side. If errors are encountered during provisioning, the command can be issued multiple times until ICM completes all the required tasks for all the specified nodes without error.

-

Modifying provisioned infrastructure

When your needs change, you can modify infrastructure that has already been provisioned, including configurations on which services have been deployed, at any time by changing the characteristics of existing nodes, adding nodes, or removing nodes.

When you repeat the icm provision command following an error, the instances.json file does not yet exist, so if the state directory is not in the current working directory you must use the -stateDir option to specify the incomplete infrastructure you want to continue provisioning; likewise, if the working directory does not contain the configuration files, you must repeat any location override options. When you repeat the command to modify successfully provisioned infrastructure, however, you do not need to do so; as long as you are working in the directory containing the instances.json file, it is automatically used to identify the infrastructure you are reprovisioning. This is shown in the sections that follow.

Overcoming Provisioning Errors

When you issue the icm provision command and errors prevent successful provisioning, the state directory is created, but the instances.json file is not. Simply issue the icm provision command again, using the -stateDir option to specify the state subdirectory’s location if it is not in the current working directory. The existing state directory indicates that provisioning is incomplete and provides the needed information about what has been done and what hasn’t. For example, suppose you encounter the problem in the following:

$ icm provision

Starting plan of ANDY-DM-TEST...

...completed plan of ANDY-DM-TEST

Starting apply of ANDY-AM-TEST...

Error: Thread exited with value 1

See /Samples/AWS/state/Sample-DS-TEST/terraform.err

Review the indicated errors, fix as needed, then run icm provision again in the same directory:

$ icm provision

Starting plan of ANDY-DM-TEST...

...completed plan of ANDY-DM-TEST

Starting apply of ANDY-DM-TEST...

...completed apply of ANDY-DM-TEST

[...]

To destroy: icm unprovision [-cleanUp] [-force]
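Because icm provision is reentrant, repeating it after a transient provider error can be scripted. The following is a minimal sketch, not part of ICM: the function name, its parameters, and the assumption that the icm command exits with a nonzero status when provisioning fails are all illustrative. A blind retry only helps with transient errors; configuration mistakes still need to be reviewed and fixed by hand.

```python
import subprocess
import time


def provision_until_success(max_attempts=3, delay_seconds=30.0, state_dir=None,
                            run=subprocess.run, sleep=time.sleep):
    """Re-issue `icm provision` until it exits with status 0.

    Returns the number of attempts used and raises RuntimeError if every
    attempt fails. `run` and `sleep` are injectable so the loop can be
    exercised without ICM installed. Assumption: a failed provisioning
    run is reported through a nonzero exit status.
    """
    command = ["icm", "provision"]
    if state_dir is not None:
        # -stateDir identifies a state directory outside the working directory.
        command += ["-stateDir", state_dir]
    for attempt in range(1, max_attempts + 1):
        result = run(command)
        if result.returncode == 0:
            return attempt
        if attempt < max_attempts:
            sleep(delay_seconds)
    raise RuntimeError(f"icm provision still failing after {max_attempts} attempts")
```

Called with no arguments inside the ICM container, this would run icm provision in the current working directory up to three times, pausing between attempts.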

Modifying Provisioned Infrastructure

At any time following successful provisioning — including after successful services deployment using the icm run command — you can alter the provisioned infrastructure or configuration by modifying your definitions.json file and executing the icm provision command again. If changing a deployed configuration, you would then execute the icm run command again, as described in Redeploying Services.

You can modify existing infrastructure or a deployed configuration in the following ways.

-

To change the characteristics of one or more nodes, change settings within the node definitions in the definitions file. You might want to do this to vertically scale the nodes; for example, in the following definition, you could change the DataVolumeSize setting (see General Parameters) to increase the sizes of the DM nodes’ data volumes:

{ "Role": "DM", "Count": "2", "LicenseKey": "standard-iris.key", "InstanceType": "m4.xlarge", "OSVolumeSize": "32", "DataVolumeSize": "25" },

Caution: Modifying attributes of existing nodes such as changing disk sizes, adding CPUs, and so on may cause those nodes (including their persistent storage) to be recreated. This behavior is highly specific to each cloud provider, and caution should be used to avoid the possibility of corrupting or losing data.

Important: Changes to the Label and Tag fields in the definitions.json file are not supported when reprovisioning.

-

To add nodes, modify the definitions.json file in one or more of the following ways:

-

Add a new node type by adding a definition. For example, if you have deployed a sharded cluster with data nodes only, you can add compute nodes by adding an appropriate COMPUTE node definition to the definitions file.

-

Add more of an existing node type by increasing the Count specification in its definition. For example, to add two more application servers to a distributed cache cluster that already has two, you would modify the AM definition by changing "Count": "2" to "Count": "4". When you add nodes to existing infrastructure or a deployed configuration, existing nodes are not restarted or modified, and their persistent storage remains intact.

Note: When you add data nodes to a deployed sharded cluster after it has been loaded with data, you can automatically redistribute the sharded data across the new servers (although this must be done with the cluster offline); for more information, see Add Shard Data Servers and Rebalance Data.

Generally, there are many application-specific attributes that cannot be modified by ICM and must be modified manually after adding nodes.

-

Add a load balancer by adding "LoadBalancer": "true" to DATA, COMPUTE, AM, or WS node definitions.

-

To remove nodes, decrease the Count specification in the node type definition. To remove all nodes of a given type, reduce the count to 0.

Caution: Do not remove a definition entirely; Terraform will not detect the change and your infrastructure or deployed configuration will include orphaned nodes that ICM is no longer tracking.

Important: When removing one or more nodes, you cannot choose which to remove; rather nodes are unprovisioned on a last in, first out basis, so the most recently created node is the first one to be removed. This is especially significant when you have added one or more nodes in a previous reprovisioning operation, as these will be removed before the originally provisioned nodes.

You can also remove load balancers by removing "LoadBalancer": "true" from a node definition, or changing the value to false.

There are some limitations to modifying an existing configuration through reprovisioning, as follows:

-

You cannot remove nodes that store data — DATA, DM, or DS.

-

You cannot change a configuration from nonmirrored to mirrored, or the reverse.

-

You can add DATA, DS, or DM nodes to a mirrored configuration only in numbers that match the relevant MirrorMap setting, as described in Rules for Mirroring. For example, if the MirrorMap default value of primary,backup is in effect, DATA and DS nodes can be added in even numbers (multiples of two) only to be configured as additional failover pairs, and DM nodes cannot be added; if MirrorMap is primary,backup,async, DATA or DS nodes can be added in multiples of three to be configured as additional three-member mirrors, or as a pair to be configured as an additional mirror with no async, and a DM node can be added only if the existing DM mirror does not currently include an async.

-

You can add an arbiter (AR node) to a mirrored configuration, but it must be manually configured as arbiter, using the Management Portal or ^MIRROR routine, for each mirror in the configuration.

By default, when issuing the icm provision command to modify existing infrastructure, ICM prompts you to confirm; you can avoid this, for example when using a script, by using the -force option.

Remember that after reprovisioning a deployed configuration, you must issue the icm run command again to redeploy.
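The Count rules described above can be checked before a definitions file is edited. The following is a minimal sketch under stated assumptions: the function name and the mirrored flag are hypothetical, the definitions are assumed to be the list of objects read from definitions.json, and the pair check covers only the default MirrorMap of primary,backup. ICM and Terraform remain the authority on what a given change actually does.

```python
DATA_ROLES = {"DATA", "DM", "DS"}  # roles that store data, per the limitations above


def set_count(definitions, role, new_count, mirrored=False):
    """Return a copy of `definitions` with the Count for `role` changed.

    Raises ValueError for changes the text above rules out and KeyError
    when no definition exists for the role.
    """
    if new_count < 0:
        raise ValueError("Count cannot be negative")
    updated = [dict(d) for d in definitions]
    for definition in updated:
        if definition.get("Role") != role:
            continue
        old_count = int(definition["Count"])
        if role in DATA_ROLES and new_count < old_count:
            raise ValueError(f"{role} nodes store data and cannot be removed")
        # Assumption: default MirrorMap (primary,backup), so pairs only.
        if mirrored and role in {"DATA", "DS"} and (new_count - old_count) % 2:
            raise ValueError(f"mirrored {role} nodes must be added in pairs")
        # The sample definitions store Count as a string; a definition is
        # kept even when its Count drops to 0, never deleted.
        definition["Count"] = str(new_count)
        return updated
    raise KeyError(f"no {role} definition; add one instead of editing Count")
```

A script could load definitions.json with the json module, call set_count(definitions, "AM", 4), write the result back, and then run icm provision followed by icm run.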

Infrastructure Management Commands

The commands in this section are used to manage the infrastructure you have provisioned using ICM.

Many ICM command options can be used with more than one command. For example, the -role option can be used with a number of commands to specify the type of the nodes for which the command should be run (icm inventory -role AM, for instance, lists only the nodes in the deployment that are of type AM), and the -image option specifies an image from which to deploy containers for both the icm run and icm upgrade commands. For complete lists of ICM commands and their options, see ICM Commands and Options.

icm inventory

The icm inventory command lists the provisioned nodes, as at the end of the provisioning output, based on the information in the instances.json file (see The Instances File). For example:

$ icm inventory

Machine IP Address DNS Name Region Zone

------- ---------- -------- ------ ----

ANDY-DM-TEST-0001+ 00.53.183.209 ec2-00-53-183-209.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-DM-TEST-0002- 00.53.183.185 ec2-00-53-183-185.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0003 00.56.59.42 ec2-00-56-59-42.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0005 00.67.1.11 ec2-00-67-1-11.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0003 00.193.117.217 ec2-00-193-117-217.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-LB-TEST-0002 (virtual AM) ANDY-AM-TEST-1546467861.amazonaws.com us-west-1 c

ANDY-AR-TEST-0006 00.53.201.194 ec2-00-53-201-194.us-west-1.compute.amazonaws.com us-west-1 c

When mirrored nodes are part of a configuration, initial mirror failover assignments are indicated by a + (plus) following the machine name of each intended primary and a - (minus) following the machine name of each intended backup, as shown in the preceding example. These assignments can change, however; following deployment, use the icm ps command to display the mirror member status of the deployed nodes.

You can also use the -machine or -role options to filter by node name or role, for example, with the same cluster as in the preceding example:

$ icm inventory -role AM

Machine IP Address DNS Name Region Zone

------- ---------- -------- ------ ----

ANDY-AM-TEST-0003 00.56.59.42 ec2-00-56-59-42.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0005 00.67.1.11 ec2-00-67-1-11.us-west-1.compute.amazonaws.com us-west-1 c

ANDY-AM-TEST-0003 00.193.117.217 ec2-00-193-117-217.us-west-1.compute.amazonaws.com us-west-1 c

If the fully qualified DNS names from the cloud provider are too wide for a readable display, you can use the -options option with the Docker wide argument to make the output wider, for example:

icm inventory -options wide

For more information on the -options option, see Using ICM with Custom and Third-Party Containers.
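When the inventory listing feeds a script, it can be split into fields. The following is a minimal sketch that assumes the output keeps the whitespace-separated column layout shown in the examples above (machine, IP address, DNS name, region, zone); the function and key names are illustrative and not part of ICM.

```python
def parse_inventory(output):
    """Parse `icm inventory` text into a list of dicts, one per machine."""
    rows = []
    for line in output.splitlines():
        tokens = line.split()
        # Skip blank lines, the header row, and the dashed separator row.
        if len(tokens) < 5 or tokens[0] == "Machine" or set(tokens[0]) == {"-"}:
            continue
        name = tokens[0]
        # A trailing + or - marks the intended primary or backup.
        mirror_role = {"+": "primary", "-": "backup"}.get(name[-1])
        if mirror_role:
            name = name[:-1]
        rows.append({
            "machine": name,
            "mirror_role": mirror_role,    # initial assignment only; may change later
            "ip": " ".join(tokens[1:-3]),  # "(virtual AM)" for a load balancer row
            "dns": tokens[-3],
            "region": tokens[-2],
            "zone": tokens[-1],
        })
    return rows
```

Because the mirror markers reflect only the initial assignments, a script that needs current mirror status should rely on icm ps after deployment.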

icm ssh

The icm ssh command runs an arbitrary command on the specified host nodes. Because mixing output from multiple commands would be hard to interpret, the output is written to files and a list of output files provided, for example:

$ icm ssh -command "ping -c 5 intersystems.com" -role DM

Executing command 'ping -c 5 intersystems.com' on ANDY-DM-TEST-0001...

Executing command 'ping -c 5 intersystems.com' on ANDY-DM-TEST-0002...

...output in ./state/ANDY-DM-TEST/ANDY-DM-TEST-0001/ssh.out

...output in ./state/ANDY-DM-TEST/ANDY-DM-TEST-0002/ssh.out

However, when the -machine and/or -role options are used to specify exactly one node, as in the following, or there is only one node, the output is also written to the console:

$ icm ssh -command "df -k" -machine ANDY-DM-TEST-0001

Executing command 'df -k' on ANDY-DM-TEST-0001...

...output in ./state/ANDY-DM-TEST/ANDY-DM-TEST-0001/ssh.out

Filesystem 1K-blocks Used Available Use% Mounted on

rootfs 10474496 2205468 8269028 22% /

tmpfs 3874116 0 3874116 0% /dev

tmpfs 3874116 0 3874116 0% /sys/fs/cgroup

/dev/xvda2 33542124 3766604 29775520 12% /host

/dev/xvdb 10190100 36888 9612540 1% /irissys/data

/dev/xvdc 10190100 36888 9612540 1% /irissys/wij

/dev/xvdd 10190100 36888 9612540 1% /irissys/journal1

/dev/xvde 10190100 36888 9612540 1% /irissys/journal2

shm 65536 492 65044 1% /dev/shm

The icm ssh command can also be used in interactive mode to execute long-running, blocking, or interactive commands on a host node. Unless the command is run on a single-node deployment, the -interactive flag must be accompanied by a -role or -machine option restricting the command to a single node. If the -command option is not used, the destination user's default shell (for example bash) is launched.

See icm exec for an example of running a command interactively.

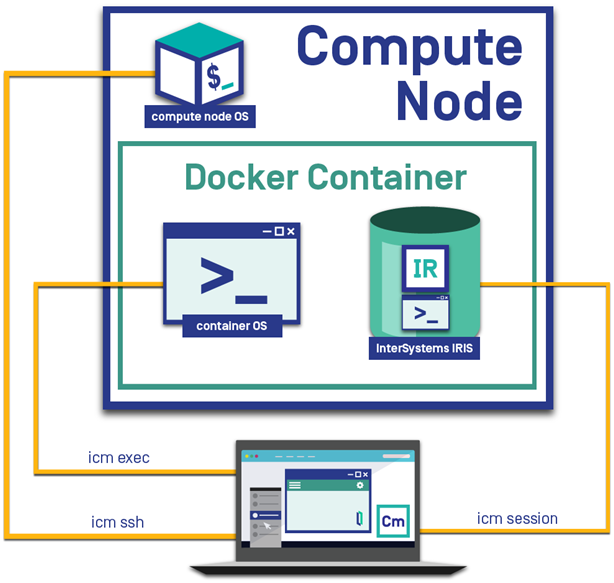

Two commands described in Service Management Commands, icm exec (which runs an arbitrary command on the specified containers) and icm session (which opens an interactive session for the InterSystems IRIS instance on a specified node) can be grouped with icm ssh as a set of powerful tools for interacting with your ICM deployment. The icm scp command, which securely copies a file or directory from the local ICM container to the host OS of the specified node or nodes, is frequently used with icm ssh.
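When icm ssh runs on several nodes, its console output lists one output file per node. The following is a minimal sketch for collecting those paths from captured console text; it assumes the "...output in" line format and the state/<definition>/<machine>/ssh.out layout shown in the examples above, and the function name is illustrative.

```python
import re

OUTPUT_LINE = re.compile(r"^\.\.\.output in (\S+)$")


def ssh_output_files(console_text):
    """Map each machine name to the output file path that icm ssh reported."""
    files = {}
    for line in console_text.splitlines():
        match = OUTPUT_LINE.match(line.strip())
        if match:
            path = match.group(1)
            # In the sample output the machine name is the file's parent directory.
            machine = path.rstrip("/").split("/")[-2]
            files[machine] = path
    return files
```

The returned dictionary can then be used to open and read each node's ssh.out file in turn.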

icm scp

The icm scp command securely copies a file or directory from the local ICM container to the local file system of the specified node or nodes. The command syntax is as follows:

icm scp -localPath local-path [-remotePath remote-path]

Both localPath and remotePath can be either files or directories. If remotePath is a directory, it must contain a trailing forward slash (/), or it will be assumed to be a file. If both are directories, the contents of the local directory are recursively copied; if you want the directory itself to be copied, remove the trailing slash (/) from localPath.

The user specified by the SSHUser field must have the needed permissions for the host file system location specified by the optional remote-path argument. The default for remote-path is $HOME as defined in the host OS.

See also the icm cp command, which copies a local file or directory on the specified node into the specified container, or from the container onto the local file system.
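The trailing-slash rule for remotePath is easy to get wrong in scripts. The following is a minimal sketch of a helper that builds the icm scp argument list and appends the slash when the destination is meant to be a directory; the helper and its remote_is_dir parameter are hypothetical, and only the -localPath and -remotePath options come from the syntax shown above.

```python
def scp_command(local_path, remote_path=None, remote_is_dir=False):
    """Build an argument list for `icm scp`.

    When `remote_is_dir` is true, a trailing slash is appended so that
    the remote path is treated as a directory and not as a file.
    """
    command = ["icm", "scp", "-localPath", local_path]
    if remote_path is not None:
        if remote_is_dir and not remote_path.endswith("/"):
            remote_path += "/"
        command += ["-remotePath", remote_path]
    return command
```

The resulting list could be passed to subprocess.run inside the ICM container; omitting remote_path leaves the destination at its default of $HOME.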

Deploy and Manage Services

ICM carries out deployment of software services using Docker images, which it runs as containers by making calls to Docker. Containerized deployment using images supports ease of use and DevOps adaptation while avoiding the risks of manual upgrade. In addition to Docker, ICM also carries out some InterSystems IRIS-specific configuration over JDBC.

There are many container management and orchestration tools available, and these can be used to extend ICM’s deployment and management capabilities.

The icm run Command

The icm run command pulls, creates, and starts a container from the specified image on each of the provisioned nodes. By default, the image specified by the DockerImage field in the configuration files is used, and the name of the deployed container is iris. This name is reserved for and should be used only for containers created from the following InterSystems images (or images based on them), which are available from the InterSystems Container Registry, as described in Using the InterSystems Container Registry.

-

iris — Contains an instance of InterSystems IRIS.

The InterSystems IRIS images distributed by InterSystems, and how to use one as a base for a custom image that includes your InterSystems IRIS-based application, are described in detail in Running InterSystems IRIS Containers.

InterSystems IRIS images are deployed by ICM as DATA, COMPUTE, DM, AM, DS, and QS nodes. When deploying the iris image, you can override one or more InterSystems IRIS configuration settings for all of the iris containers you deploy, or override different settings for containers deployed on different node types; for more information, see Deploying with Customized InterSystems IRIS Configurations.

-

iris-lockeddown — Contains an instance of InterSystems IRIS installed with Locked Down security. Use this image to support the strictest security requirements by deploying a highly secure InterSystems IRIS container. The differences between containers from this image and those from the standard iris image are detailed in Locked Down InterSystems IRIS Container.

Important: Be sure to review the documentation for the iris-lockeddown image before using it to deploy InterSystems IRIS containers.

In InterSystems IRIS containers deployed from the iris-lockeddown image, the instance web server is disabled, which also disables the Management Portal. To enable the Management Portal for such containers, so you can use it to connect to the deployment as described at the end of this section, add the webserver property with the value 1 (webserver=1) to a configuration merge file specified by the UserCPF property, as described in Deploying with Customized InterSystems IRIS Configuration Parameters.

Note: As an additional security measure, in containerless mode, ICM can install nonroot instances of InterSystems IRIS, that is, instances installed and owned by a user without root privileges.

-

iris-ml — Contains an instance of InterSystems IRIS with the IntegratedML feature, which allows you to use automated machine learning functions directly from SQL to create and use predictive models.

-

irishealth, irishealth-lockeddown, irishealth-ml — Contain instances of InterSystems IRIS for Health®, a complete healthcare platform built around InterSystems IRIS that enables the rapid development and deployment of data-rich and mission-critical healthcare applications. The Locked Down and IntegratedML options are as described above for InterSystems IRIS. InterSystems IRIS for Health images are deployed by ICM in the same manner as InterSystems IRIS images.

-

webgateway, webgateway-lockeddown, webgateway-nginx — Contain an InterSystems Web Gateway instance along with an Apache or Nginx web server; the webgateway-lockeddown image is similar to the locked down InterSystems IRIS images. For information about these images, see Using the InterSystems Web Gateway Container. The webgateway images are deployed by ICM as WS nodes, which are configured as a web server for DATA nodes (or DATA and COMPUTE nodes) in a node-level sharded cluster, and for AM or DM nodes in other configurations.

-

arbiter — Contains an ISCAgent instance to act as mirror arbiter. For information about using this image, see Mirroring with InterSystems IRIS Containers. The arbiter image is deployed on an AR node, which is configured as the arbiter host in a mirrored deployment. For more information on mirrored deployment and topology, see ICM Cluster Topology.

-

iam — Contains the InterSystems API Manager, which ICM deploys as a CN node; for more information, see Deploying InterSystems API Manager.

-

sam — Contains the SAM Manager component of the System Alerting and Monitoring (SAM) cluster monitoring solution, which ICM deploys as a SAM node; for more information, see Monitoring in ICM.

All of the images in the preceding list except sam are available for ARM platforms, for example iris-arm64.

By including the DockerImage field in each node definition in the definitions.json file, you can run different InterSystems IRIS images on different node types. For example, you must do this to run the arbiter image on the AR node and the webgateway image on WS nodes while running the iris image on the other nodes. For a list of node types and corresponding InterSystems images, see ICM Node Types.

If the wrong InterSystems image is specified for a node by the DockerImage field or the -image option of the icm run command, deployment fails, with an appropriate message from ICM; this happens, for example, if the iris image is specified for an AR (arbiter) node, or any InterSystems image for a CN node. Therefore, when the DockerImage field specifies the iris image in the defaults.json file and you include an AR or WS definition in the definitions.json file, you must include the DockerImage field in the AR or WS definition to override the default and specify the appropriate image (arbiter or webgateway, respectively).
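A missing DockerImage override for an AR or WS definition can be caught before icm run is issued. The following is a minimal sketch, not an ICM feature: the function names are hypothetical, the defaults and definitions are assumed to be the parsed contents of defaults.json and definitions.json, and matching on the start of the image name (so that variants such as webgateway-nginx pass) is a simplifying assumption.

```python
REQUIRED_IMAGE = {"AR": "arbiter", "WS": "webgateway"}  # roles needing a non-iris image


def image_name(reference):
    """Return the bare image name from a reference such as 'intersystems/iris:latest-em'."""
    last = reference.rsplit("/", 1)[-1]
    return last.split(":", 1)[0]


def wrong_images(defaults, definitions):
    """List AR and WS definitions whose effective image does not match their role."""
    problems = []
    for definition in definitions:
        role = definition.get("Role")
        expected = REQUIRED_IMAGE.get(role)
        if expected is None:
            continue
        # A DockerImage in the definition overrides the one in the defaults file.
        reference = definition.get("DockerImage", defaults.get("DockerImage", ""))
        if not image_name(reference).startswith(expected):
            problems.append(f"{role}: expected a {expected} image, got {reference!r}")
    return problems
```

An empty list means every AR and WS definition resolves to an image of the expected kind; ICM itself still performs the authoritative check at deployment time.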

The major versions of the image from which you launched ICM and the InterSystems images you specify using the DockerImage field must match; for example, you cannot deploy a 2022.2 InterSystems IRIS image using a 2022.1 ICM image. For information about upgrading ICM before you upgrade your InterSystems containers, see Upgrading ICM Using Distributed Management Mode.

Container images from InterSystems comply with the Open Container Initiative (OCI) specification, and are built using the Docker Enterprise Edition engine, which fully supports the OCI standard and allows for the images to be certified and featured in the Docker Hub registry.

InterSystems images are built and tested using the widely popular Ubuntu container operating system, and are therefore supported on any OCI-compliant runtime engine on Linux-based operating systems, both on premises and in public clouds.

To learn how to quickly get started running an InterSystems IRIS container on the command line, see InterSystems IRIS Basics: Running an InterSystems IRIS Container; for detailed information about deploying InterSystems IRIS and InterSystems IRIS-based applications in containers using methods other than ICM, see Running InterSystems IRIS in Containers.

You can also use the -image and -container command-line options with icm run to specify a different image and container name. This allows you to deploy multiple containers created from multiple images on each provisioned node by using the icm run command multiple times — the first time to run the images specified by the DockerImage fields in the node definitions and deploy the iris container (of which there can be only one) on each node, as described in the foregoing paragraphs, and one or more subsequent times with the -image and -container options to run a custom image on all of the nodes or some of the nodes. Each container running on a given node must have a unique name. The -machine and -role options can also be used to restrict container deployment to a particular node, or to nodes of a particular type, for example, when deploying your own custom container on a specific provisioned node.

Another frequently used option, -iscPassword, specifies the InterSystems IRIS password to set for all deployed InterSystems IRIS containers; this value could be included in the configuration files, but the command line option avoids committing a password to a plain-text record. If the InterSystems IRIS password is not provided by either method, ICM prompts for it (with typing masked).

For security, ICM does not transmit the InterSystems IRIS password (however specified) in plain text, but instead uses a cryptographic hash function to generate a hashed password and salt locally, then sends these using SSH to the deployed InterSystems IRIS containers on the host nodes.
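The general idea of deriving a salted hash locally, so that the clear-text password never leaves the machine, can be illustrated as follows. This is a generic sketch only: the source does not say which hash function, salt length, or iteration count ICM uses, so the PBKDF2-HMAC-SHA512 function and every parameter value here are assumptions chosen for illustration.

```python
import hashlib
import os


def hash_password(password, salt=None, iterations=10000):
    """Return (digest, salt) for `password` using PBKDF2-HMAC-SHA512.

    Illustrative only: the algorithm and parameters are assumptions,
    not ICM's documented scheme.
    """
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for each password
    digest = hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, iterations)
    return digest, salt
```

Only the digest and salt would then be transmitted; a receiver holding the same salt can verify a candidate password by repeating the derivation and comparing digests.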

Given all of the preceding, consider the following three examples of container deployment using the icm run command. (These do not present complete procedures, but are limited to the procedural elements relating to the deployment of particular containers on particular nodes.)

-

To deploy a distributed cache cluster with one DM node and several AM nodes:

-

When creating the defaults.json file, as described in Configuration, State, and Log Files and Define the Deployment, include the following to specify the default image from which to create the iris containers:

"DockerImage": "intersystems/iris:latest-em"

-

Execute the following command on the ICM command line:

icm run -iscPassword "<password>"

A container named iris containing an InterSystems IRIS instance with its initial password set as specified is deployed on each of the nodes; ICM performs the needed ECP configuration following container deployment.

-

To deploy a basic sharded cluster with mirrored DATA nodes and an AR (arbiter) node:

-

When creating the defaults.json file, as described in Configuration, State, and Log Files and Define the Deployment, include the following to specify the default image from which to create the iris containers and to enable mirroring (as described in Rules for Mirroring):

"DockerImage": "intersystems/iris:latest-em", "Mirror": "true"

-

When creating the definitions.json file, override the DockerImage field in the defaults file for the AR node only by specifying the arbiter image in the AR node definition, for example:

{ "Role": "AR", "Count": "1", "DockerImage": "intersystems/arbiter:latest-em" }

-

Execute the following command on the ICM command line:

icm run -iscPassword "<password>"

A container named iris containing an InterSystems IRIS instance with its initial password set as specified is deployed on each of the DATA nodes; a container named iris containing an ISCAgent to act as mirror arbiter is deployed on the AR node; ICM performs the needed sharding and mirroring configuration following container deployment.

-

To deploy a DM node with a stand-alone InterSystems IRIS instance in the iris container and an additional container created from a custom image, plus several WS nodes connected to the DM:

-

When creating the definitions.json file, as described in Configuration, State, and Log Files and Define the Deployment, specify the iris image for the DM node and the webgateway image for the WS nodes, for example:

{ "Role": "DM", "Count": "1", "DockerImage": "intersystems/iris-arm64:latest-em" },
{ "Role": "WS", "Count": "3", "DockerImage": "intersystems/webgateway:latest-em" }

-

Execute the following command on the ICM command line:

icm run

ICM prompts for the initial InterSystems IRIS password with typing masked, and a container named iris containing an InterSystems IRIS instance is deployed on the DM node, a container named iris containing an InterSystems Web Gateway installation and an Apache web server is deployed on each of the WS nodes, and ICM performs the needed web server configuration following container deployment.

-

Execute another icm run command to deploy the custom container on the DM node, for example either of the following:

icm run -container customsensors -image myrepo/sensors:1.0 -role DM

icm run -container customsensors -image myrepo/sensors:1.0 -machine ANDY-DM-TEST-0001

A container named customsensors created from the image sensors in your repository is deployed on the DM node.

Bear in mind the following further considerations:

-

The container name iris remains the default for all ICM container and service management commands (as described in the following sections), so when you execute a command involving an additional container you have deployed using another name, you must refer to that name explicitly using the -container option. For example, to remove the custom container in the last example from the DM node, you would issue the following command:

icm rm -container customsensors -machine ANDY-DM-TEST-0001

Without -container customsensors, this command would remove the iris container by default.

-

The DockerRegistry, DockerUsername, and DockerPassword fields are required to specify and log in to (if it is private) the Docker registry in which the specified image is located; for details see Docker Repositories.

-

If you use the -namespace command line option with the icm run command to override the namespace specified in the defaults file (or the default of IRISCLUSTER if not specified in defaults), the value of the Namespace field in the instances.json file (see The Instances File) is updated with the name you specified, and this becomes the default namespace when using the icm session and icm sql commands.

Additional Docker options, such as --volume, can be specified on the icm run command line using the -options option, for example:

icm run -options "--volume /shared:/host" -image intersystems/iris:latest-em

For more information on the -options option, see Using ICM with Custom and Third-Party Containers.

The -command option can be used with icm run to provide arguments to (or in place of) the Docker entry point; for more information, see Overriding Default Commands.

Because ICM issues Docker commands in multiple threads, the order in which containers are deployed on nodes is not deterministic. This is illustrated in the example that follows, which represents output from deployment of the sharded cluster configuration described in Define the Deployment. Repetitive lines are omitted for brevity.

$ icm run

Executing command 'docker login' on ANDY-DATA-TEST-0001...

...output in /Samples/AWS/state/ANDY-DATA-TEST/ANDY-DATA-TEST-0001/docker.out

...

Pulling image intersystems/iris:latest-em on ANDY-DATA-TEST-0001...

...pulled ANDY-DATA-TEST-0001 image intersystems/iris:latest-em

...

Creating container iris on ANDY-DATA-TEST-0002...

...

Copying license directory /Samples/license/ to ANDY-DATA-TEST-0003...

...

Starting container iris on ANDY-DATA-TEST-0004...

...

Waiting for InterSystems IRIS to start on ANDY-DATA-TEST-0002...

...

Configuring SSL on ANDY-DATA-TEST-0001...

...

Enabling ECP on ANDY-DATA-TEST-0003...

...

Setting System Mode on ANDY-DATA-TEST-0002...

...

Acquiring license on ANDY-DATA-TEST-0002...

...

Enabling shard service on ANDY-DATA-TEST-0001...

...

Assigning shards on ANDY-DATA-TEST-0001...

...

Configuring application server on ANDY-DATA-TEST-0003...

...

Management Portal available at: http://ec2-00-56-140-23.us-west-1.compute.amazonaws.com:52773/csp/sys/UtilHome.csp

At completion, ICM outputs a link to the Management Portal of the InterSystems IRIS instance running in the iris container on the DM node or, when a sharded cluster is deployed, to the Management Portal of the instance on data node 1, which is the lowest numbered, in this case ANDY-DATA-TEST-0001. If the DM node or the sharded cluster is mirrored, the link is to the initial primary; however, if you define a load balancer (role LB) for the mirrored DM node or DATA nodes, the link is to the mirror-aware load balancer, and so will always be to the current primary.

Redeploying Services

To make the deployment process as flexible and resilient as possible, the icm run command is fully reentrant — it can be issued multiple times for the same deployment. When an icm run command is repeated, ICM stops and removes the affected containers (the equivalent of icm stop and icm rm), then creates and starts them from the applicable images again, while preserving the storage volumes for InterSystems IRIS instance-specific data that it created and mounted as part of the initial deployment pass (see Storage Volumes Mounted by ICM).

There are four primary reasons for redeploying services by executing an icm run command more than once, as follows:

-

Redeploying the existing containers with their existing storage volumes.

To replace deployed containers with new versions while preserving the instance-specific storage volumes of the affected InterSystems IRIS containers, thereby redeploying the existing instances, simply repeat the original icm run command that first deployed the containers. You might do this if you have made a change in the definitions files that requires redeployment, for example you have updated the licenses in the directory specified by the LicenseDir field.

-

Redeploying the InterSystems IRIS containers without the existing storage volumes.

To replace the InterSystems IRIS containers in the deployment without preserving their instance-specific storage volumes, you can delete that data for those instances before redeploying using the following command:

icm ssh -command "sudo rm -rf /<mount_dir>/*/*"

where mount_dir is the directory (or directories) under which the InterSystems IRIS data, WIJ, and journal directories are mounted (which is /irissys/ by default, or as configured by the DataMountPoint, WIJMountPoint, Journal1MountPoint, and Journal2MountPoint fields; for more information, see Storage Volumes Mounted by ICM). You can use the -role or -machine options to limit this command to specific nodes, if you wish. When you then repeat the icm run command that originally deployed the InterSystems IRIS containers, those that still have instance-specific volumes are redeployed as the same instances, while those for which you deleted the volumes are redeployed as new instances.

-

Deploying services on nodes you have added to the infrastructure, as described in Reprovisioning the Infrastructure.

When you repeat an icm run command after adding nodes to the infrastructure, containers on the existing nodes are redeployed as described in the preceding (with their storage volumes, or without if you have deleted them) while new containers are deployed on the new nodes. This allows the existing nodes to be reconfigured for the new deployment topology, if necessary.

-

Overcoming deployment errors.

If the icm run command fails on one or more nodes due to factors outside ICM’s control, such as network latency and disconnects or interruptions in cloud provider service (as indicated by error log messages), you can issue the command again; in most cases, deployment will succeed on repeated tries. If the error persists, however, and requires manual intervention (for example, if it is caused by an error in one of the configuration files), you may need to delete the storage volumes on the node or nodes affected, as described in the preceding, before reissuing icm run after fixing the problem. This is because ICM recognizes a node without instance-specific data as a new node, and marks the storage volumes of an InterSystems IRIS container as fully deployed only when all configuration is successfully completed; if configuration begins but falls short of success and the volumes are not marked, ICM cannot redeploy on that node. In a new deployment, you may find it easiest to issue the command icm ssh -command "sudo rm -rf /irissys/*/*" without -role or -machine constraints to roll back all nodes on which InterSystems IRIS is to be redeployed.
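The advice to reissue the command after a transient failure can be scripted. The following is a minimal sketch only: the retry function and its arguments are hypothetical helpers, not part of ICM, and it assumes that icm run returns a nonzero exit status when deployment fails on any node, which you should verify for your ICM version.

```shell
# Hypothetical helper: run a command up to MAX_TRIES times, pausing
# DELAY_SECONDS between attempts. Returns 0 on the first success and
# 1 if every attempt fails.
# Usage: retry MAX_TRIES DELAY_SECONDS command [args...]
retry() {
    max_tries=$1
    delay=$2
    shift 2
    attempt=1
    while [ "$attempt" -le "$max_tries" ]; do
        if "$@"; then
            return 0
        fi
        echo "attempt $attempt of $max_tries failed: $*" >&2
        # Do not sleep after the final attempt.
        if [ "$attempt" -lt "$max_tries" ]; then
            sleep "$delay"
        fi
        attempt=$((attempt + 1))
    done
    return 1
}

# Example, run from the deployment directory inside the ICM container
# (assumes a nonzero exit status from icm run on failure):
#   retry 3 30 icm run
```

If the retries are exhausted, the failure is probably not transient, and the manual cleanup described above applies.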

Container Management Commands

The commands in this section are used to manage the containers you have deployed on your provisioned infrastructure.

Many ICM command options can be used with more than one command. For example, the -role option can be used with a number of commands to specify the type of node for which the command should be run (icm inventory -role AM lists only the nodes in the deployment that are of type AM), and the -image option specifies an image from which to deploy containers for both the icm run and icm upgrade commands. For complete lists of ICM commands and their options, see ICM Commands and Options.

icm ps

When deployment is complete, the icm ps command shows you the run state of containers running on the nodes, for example:

$ icm ps -container iris

Machine IP Address Container Status Health Image

------- ---------- --------- ------ ------ -----

ANDY-DATA-TEST-0001 00.56.140.23 iris Up healthy intersystems/iris:latest-em

ANDY-DATA-TEST-0002 00.53.190.37 iris Up healthy intersystems/iris:latest-em

ANDY-DATA-TEST-0003 00.67.116.202 iris Up healthy intersystems/iris:latest-em

ANDY-DATA-TEST-0004 00.153.49.109 iris Up healthy intersystems/iris:latest-em

If the -container restriction is omitted, all containers running on the nodes are listed. This includes both other containers deployed by ICM (for example, Weave network containers, or any custom or third-party containers you deployed using the icm run command) and any deployed by other means after completion of the ICM deployment.

Beyond node name, IP address, container name, and the image the container was created from, the icm ps command includes the following columns:

-

Status — One of the following status values generated by Docker: created, restarting, running (or up), removing, paused, exited, or dead.

-

Health — For iris, arbiter, and webgateway containers, one of the values starting, healthy, or unhealthy; for other containers none (or blank). When Status is exited, Health may display the exit value (where 0 means success).

For iris containers the Health value reflects the health state of the InterSystems IRIS instance in the container. (For information about the InterSystems IRIS health state, see System Monitor Health State). For arbiter containers it reflects the status of the ISCAgent, and for webgateway containers the status of the InterSystems Web Gateway web server. Bear in mind that unhealthy may be temporary, as it can result from a warning that is subsequently cleared.

-

Mirror — When mirroring is enabled (see Rules for Mirroring), the mirror member status (for example PRIMARY, BACKUP, SYNCHRONIZING) returned by the %SYSTEM.Mirror.GetMemberStatus() mirroring API call. For example:

$ icm ps -container iris

Machine IP Address Container Status Health Mirror Image

------- ---------- --------- ------ ------ ------ -----

ANDY-DATA-TEST-0001 00.56.140.23 iris Up healthy PRIMARY intersystems/iris:latest-em

ANDY-DATA-TEST-0002 00.53.190.37 iris Up healthy BACKUP intersystems/iris:latest-em

ANDY-DATA-TEST-0003 00.67.116.202 iris Up healthy PRIMARY intersystems/iris:latest-em

ANDY-DATA-TEST-0004 00.153.49.109 iris Up healthy BACKUP intersystems/iris:latest-em

For an explanation of the meaning of each status, see Mirror Member Journal Transfer and Dejournaling Status.
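Because the Health column is plain text, the icm ps listing can be filtered with standard tools. The sketch below is illustrative only: unhealthy_rows is a hypothetical helper, and it assumes that icm ps writes the table to standard output in the whitespace-separated layout shown above, with Health as the fifth field of each data row (true with or without the Mirror column).

```shell
# Hypothetical helper: read `icm ps` output on stdin and print
# "machine container health" for every data row whose Health column
# is not "healthy". Exit status is 1 if any such row was found.
unhealthy_rows() {
    awk '
        NF < 6 { next }                            # blank or short lines
        $1 == "Machine" || $1 ~ /^-+$/ { next }    # header and separator
        $5 != "healthy" { print $1, $3, $5; bad = 1 }
        END { exit bad }
    '
}

# Example (assumes the table layout shown in this section):
#   icm ps -container iris | unhealthy_rows
```

Rows reporting starting are flagged as well as unhealthy ones. Since unhealthy can be temporary, a flagged row is a prompt to look again rather than proof of a fault.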

Additional deployment and management phase commands are listed in the following. For complete information about these commands, see ICM Reference.

icm stop

The icm stop command stops the specified containers (or iris by default) on the specified nodes, or on all nodes if no machine or role constraints are provided. For example, to stop the InterSystems IRIS containers on the application servers in the distributed cache cluster configuration:

$ icm stop -container iris -role DS

Stopping container iris on ANDY-DATA-TEST-0001...

Stopping container iris on ANDY-DATA-TEST-0002...

Stopping container iris on ANDY-DATA-TEST-0004...

Stopping container iris on ANDY-DATA-TEST-0003...

...completed stop of container iris on ANDY-DATA-TEST-0004

...completed stop of container iris on ANDY-DATA-TEST-0001

...completed stop of container iris on ANDY-DATA-TEST-0002

...completed stop of container iris on ANDY-DATA-TEST-0003

icm start

The icm start command starts the specified containers (or iris by default) on the specified nodes, or on all nodes if no machine or role constraints are provided. For example, to restart one of the stopped application server InterSystems IRIS containers:

$ icm start -container iris -machine ANDY-DATA-TEST-0002

Starting container iris on ANDY-DATA-TEST-0002...

...completed start of container iris on ANDY-DATA-TEST-0002
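The two commands can be paired to restart a container in place. The following sketch uses only the -container, -machine, and -role options shown above. restart_container is a hypothetical wrapper, not an ICM command, and it assumes that icm stop returns a nonzero exit status on failure so that the start is skipped in that case.

```shell
# Hypothetical wrapper: stop, then start, the named container.
# Any remaining arguments (for example -machine NAME or -role TYPE)
# are passed unchanged to both icm commands.
# Usage: restart_container CONTAINER [icm constraint options...]
restart_container() {
    container=$1
    shift
    icm stop -container "$container" "$@" &&
        icm start -container "$container" "$@"
}

# Example:
#   restart_container iris -machine ANDY-DATA-TEST-0002
```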

icm pull

The icm pull command downloads the specified image to the specified machines. For example, to add an image to data node 1 in the sharded cluster:

$ icm pull -image intersystems/webgateway:latest-em -role DATA

Pulling ANDY-DATA-TEST-0001 image intersystems/webgateway:latest-em...

...pulled ANDY-DATA-TEST-0001 image intersystems/webgateway:latest-em

Note that the -image option is not required if the image you want to pull is the one specified by the DockerImage field in the definitions file, for example:

"DockerImage": "intersystems/iris-arm64:latest-em",

Although the icm run command automatically pulls any images not already present on the host, an explicit icm pull might be desirable for testing, staging, or other purposes.

icm rm

The icm rm command deletes the specified container (or iris by default), but not the image from which it was started, from the specified nodes, or from all nodes if no machine or role is specified. Only a stopped container can be deleted.
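Because only a stopped container can be deleted, removal is a two-step operation. The sketch below chains the two steps for a single machine. remove_container is a hypothetical helper, and it assumes that icm rm accepts the same -container and -machine options as icm stop and that both commands return a nonzero exit status on failure; check ICM Commands and Options before relying on either assumption.

```shell
# Hypothetical helper: stop a container on one machine, then remove it.
# The removal is attempted only if the stop succeeds.
# Usage: remove_container CONTAINER MACHINE
remove_container() {
    container=$1
    machine=$2
    icm stop -container "$container" -machine "$machine" &&
        icm rm -container "$container" -machine "$machine"
}

# Example:
#   remove_container iris ANDY-DATA-TEST-0003
```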

icm upgrade

The icm upgrade command replaces the specified container on the specified machines. ICM orchestrates the following sequence of events to carry out an upgrade:

-

Pull the new image

-

Create the new container

-

Stop the existing container

-

Remove the existing container

-

Start the new container

By staging the new image in the first two steps, ICM keeps the downtime incurred in the last three steps relatively short.

For example, to upgrade the InterSystems IRIS container on an application server:

$ icm upgrade -image intersystems/iris:latest-em -machine ANDY-AM-TEST-0003

Pulling ANDY-AM-TEST-0003 image intersystems/iris:latest-em...

...pulled ANDY-AM-TEST-0003 image intersystems/iris:latest-em

Stopping container ANDY-AM-TEST-0003...

...completed stop of container ANDY-AM-TEST-0003

Removing container ANDY-AM-TEST-0003...

...removed container ANDY-AM-TEST-0003

Running image intersystems/iris:latest-em in container ANDY-AM-TEST-0003...

...running image intersystems/iris:latest-em in container ANDY-AM-TEST-0003

The -image option is optional for the icm upgrade command. If you do not specify an image, ICM uses the value of the DockerImage field in the instances.json file (see The Instances File). If you do specify an image, when the upgrade is complete, that value is updated with the image you specified.

The major versions of the image from which you launch ICM and the InterSystems images you deploy must match. For example, you cannot deploy a 2022.2 version of InterSystems IRIS using a 2022.1 version of ICM. Therefore you must upgrade ICM before upgrading your InterSystems containers.
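One way to catch a mismatch before deploying is to compare the leading year.release portion of the two version strings. This is a sketch under stated assumptions: the function names are hypothetical, the build number in the example is made up for illustration, and treating the first two dot-separated fields as the major version is inferred from the 2022.1 and 2022.2 example above. Tags such as latest-em carry no version number, so the check cannot be applied to them.

```shell
# Hypothetical helper: print the "YYYY.N" prefix of a version string,
# or of the tag of an image reference such as repo/name:2022.1.0.209.0.
major_version() {
    tag=${1##*:}                         # drop "repo/name:" if present
    printf '%s\n' "$tag" | cut -d. -f1,2
}

# Hypothetical helper: succeed only if both arguments share the same
# major version as defined by major_version.
versions_match() {
    [ "$(major_version "$1")" = "$(major_version "$2")" ]
}

# Example (illustrative image tags):
#   versions_match intersystems/icm:2022.1.0.209.0 \
#                  intersystems/iris:2022.2.0.209.0 ||
#       echo "upgrade ICM before upgrading InterSystems IRIS" >&2
```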

If you are upgrading a container other than iris, you must use the -container option to specify the container name.

For important information about upgrading InterSystems IRIS containers, see Upgrading InterSystems IRIS Containers.